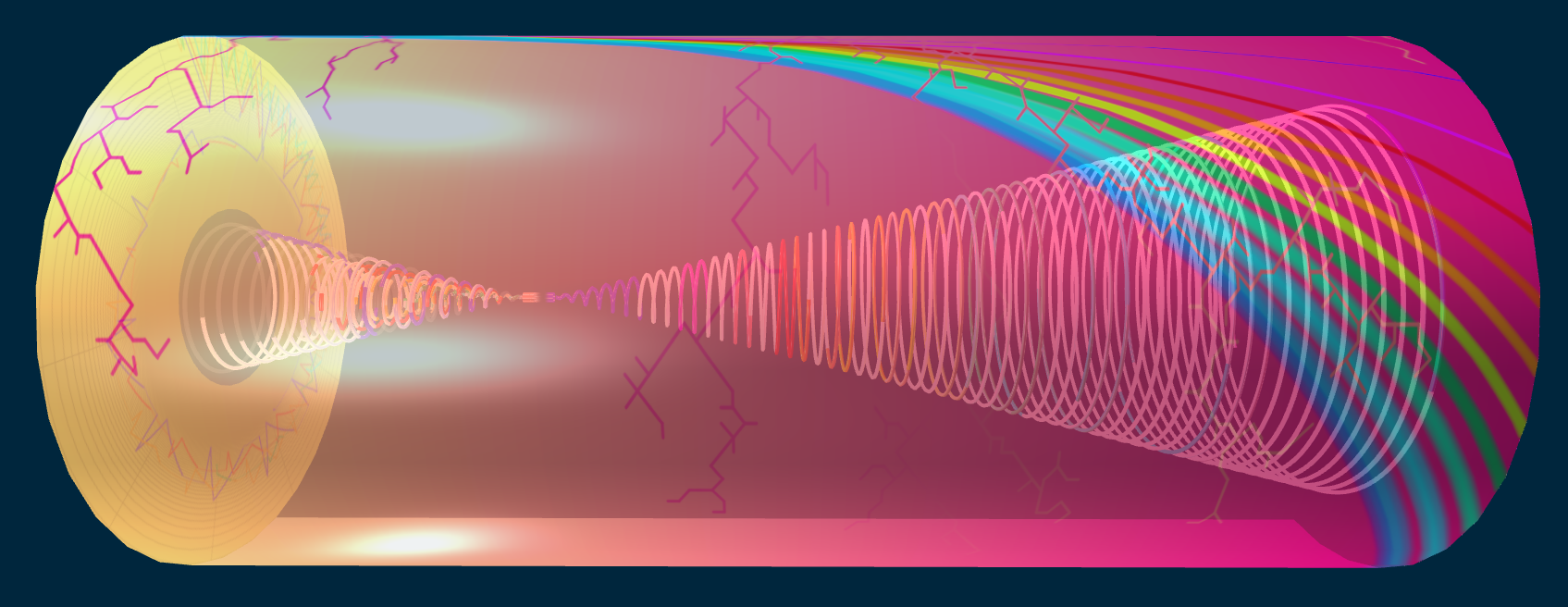

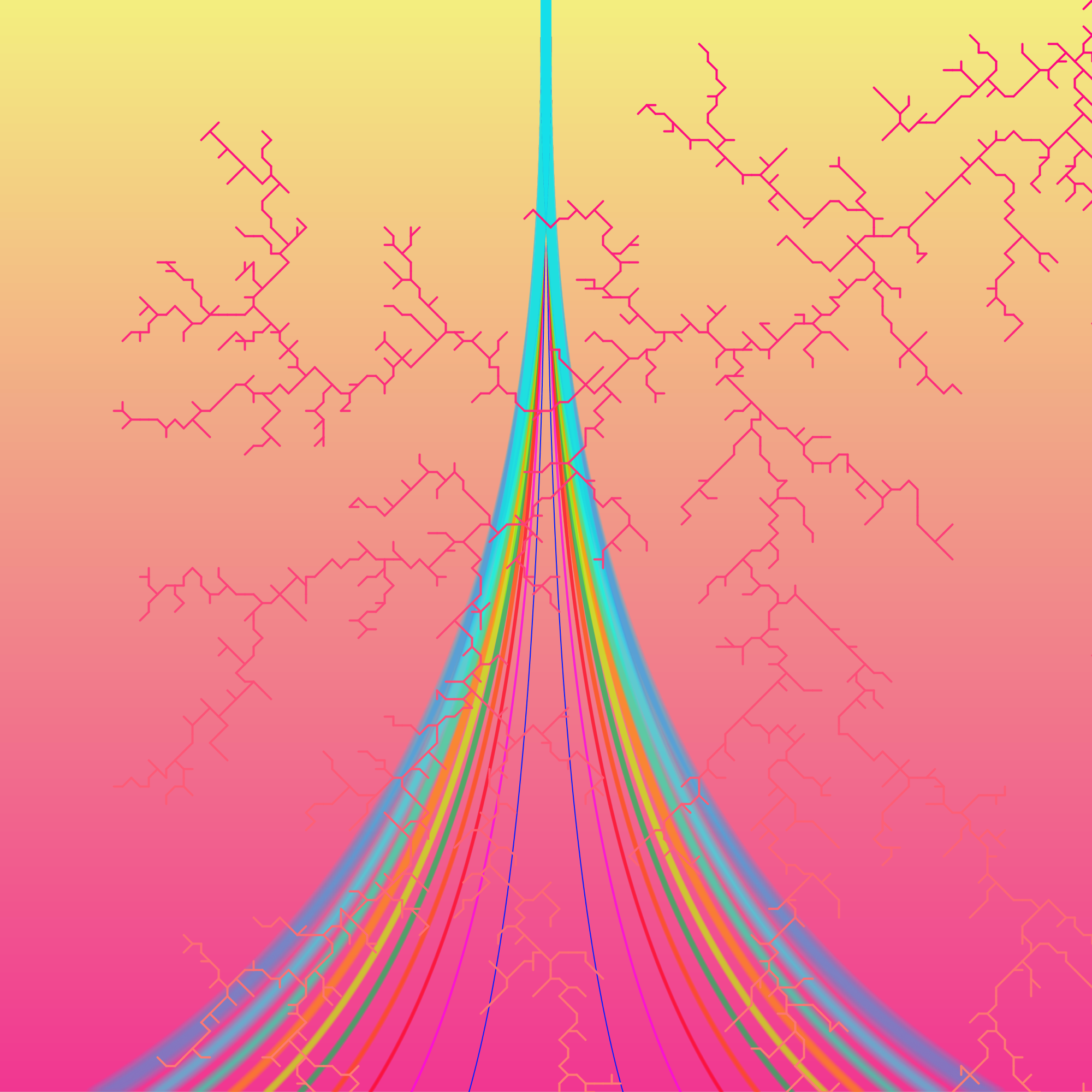

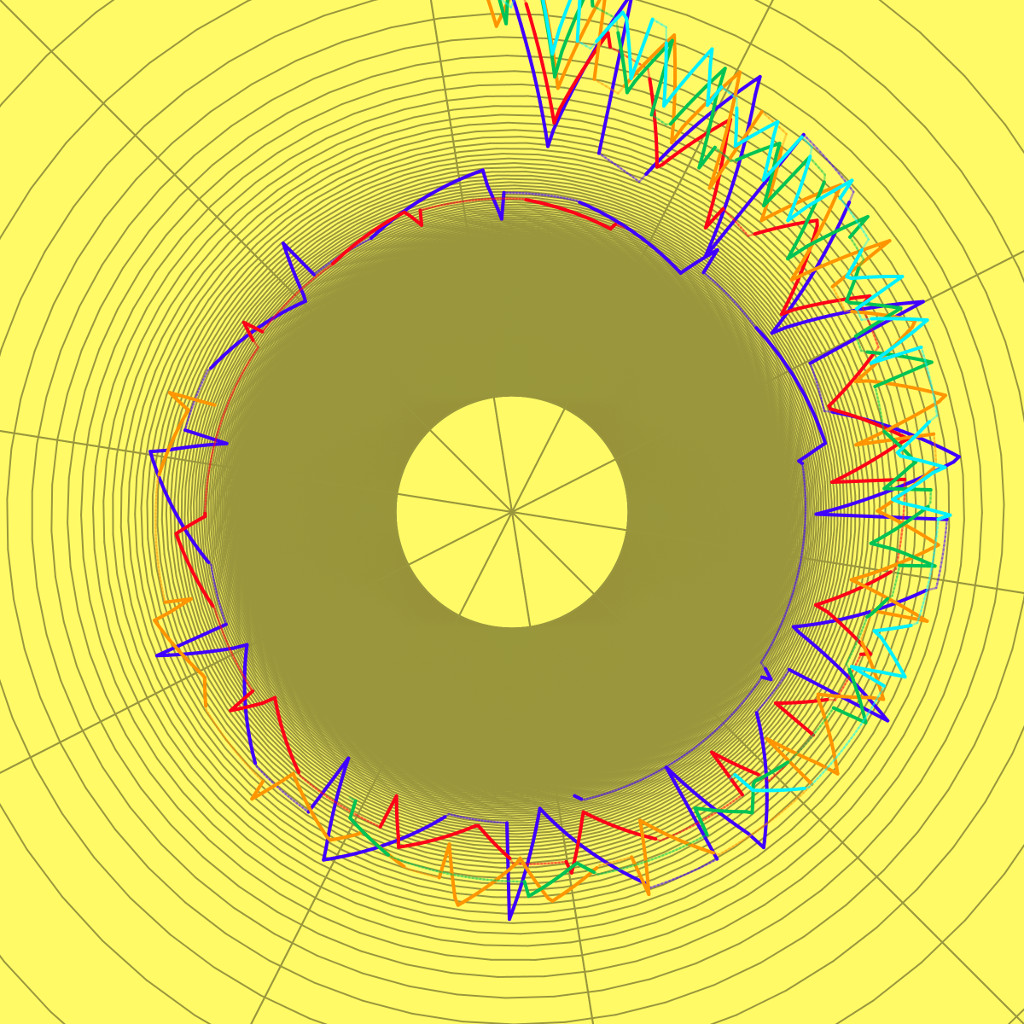

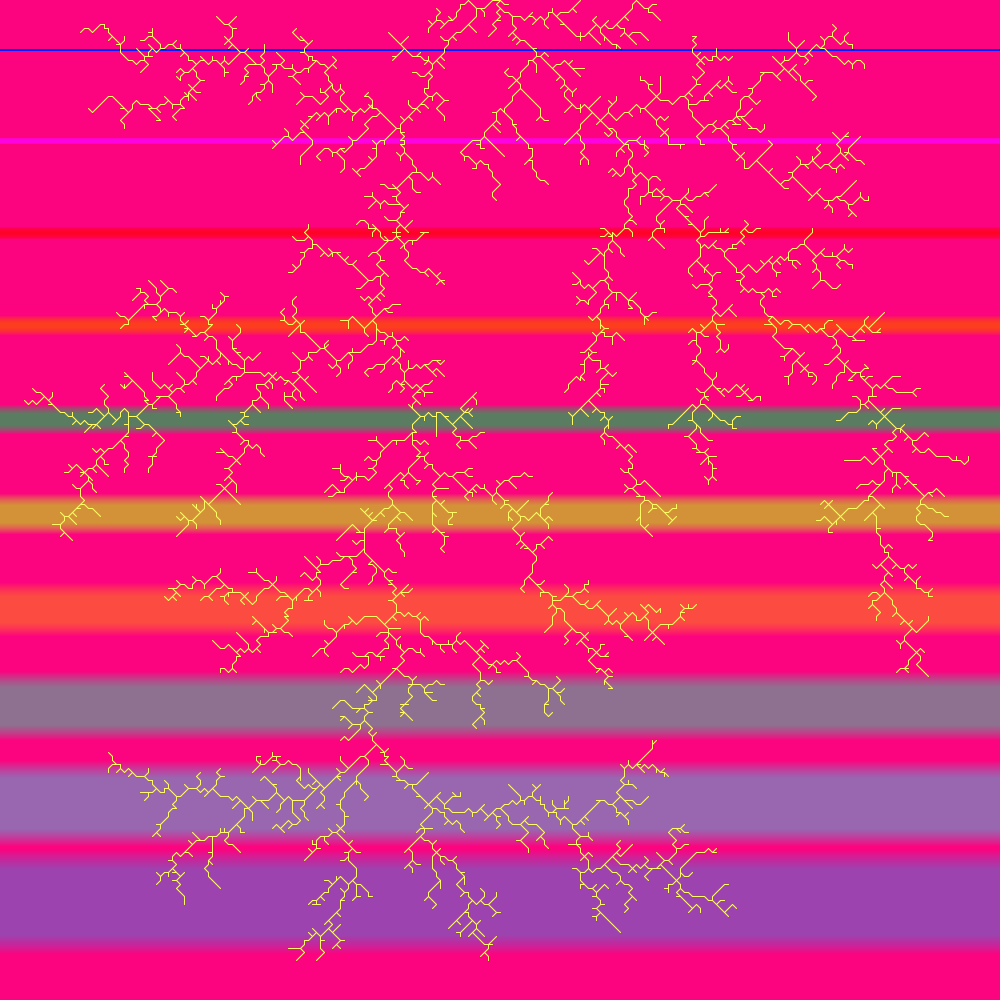

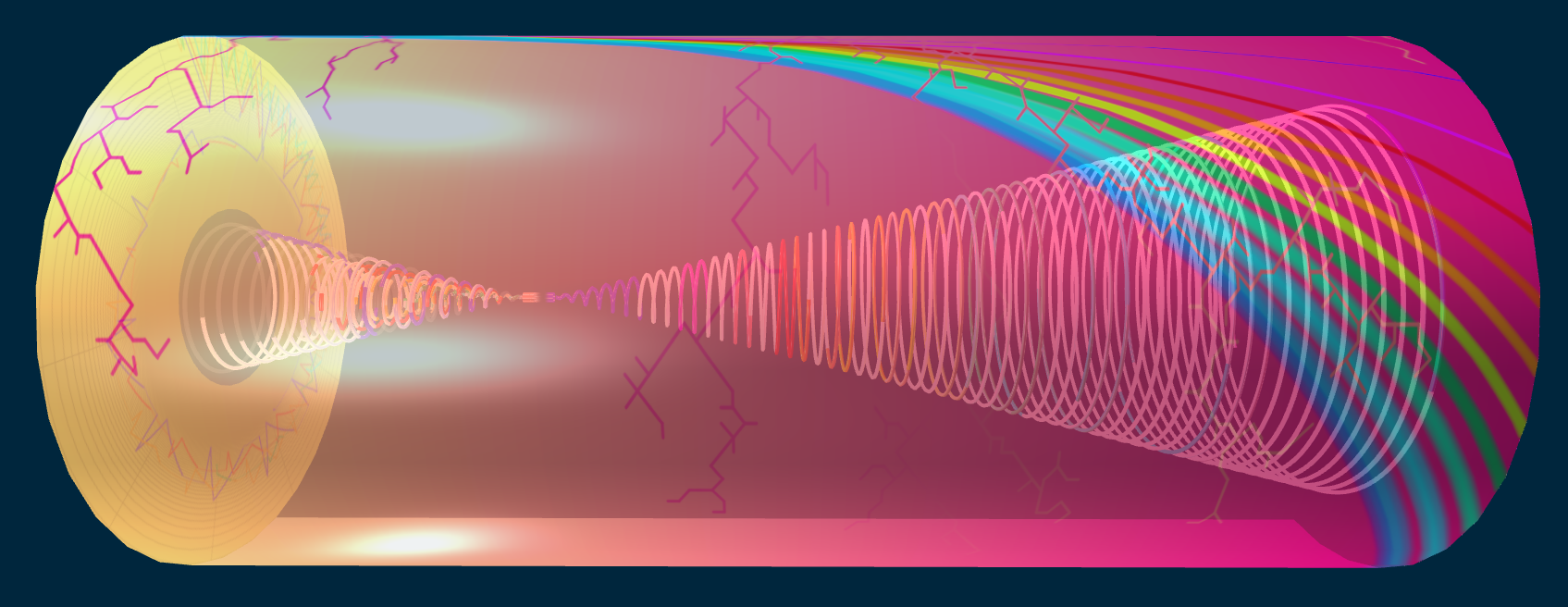

This was a project to visualize Gerard Grisey's *Talea*, based on analysis by Jerome Bailliet and on interpretation (and original design) by Anne Sophie Andersen. The project uses Three.js, Processing, and p5.js for the visuals and Python for some of the data processing. All of the 3D objects are generated by Three.js in real time from JSON data, which Python converts from CSV. The cylinders' side and end pieces are textured with 2D images generated in Processing and p5.js. Some of these textures feature root-like patterns generated by a diffusion-limited aggregation (DLA) algorithm. Hovering over any of the model's structural elements populates an overlay with information about the music it represents. Through Vimeo's Player API (with the actual player hidden from view), the user can listen to a recording of the music corresponding to each structural element. For now this covers only the large sections; attaching more specific passages to specific elements is planned.
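The CSV-to-JSON step can be sketched roughly as follows. This is a minimal illustration, not the project's actual script: it assumes a CSV with a header row, and the column names in the sample (`element`, `start`, `end`, `radius`) are hypothetical placeholders for whatever fields describe each structural element. Numeric-looking fields are converted to numbers so the JSON can be consumed directly by the Three.js side.

```python
import csv
import io
import json


def coerce(value: str):
    """Convert numeric-looking CSV fields to int or float; leave other text unchanged."""
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def csv_to_json(csv_text: str) -> str:
    """Turn CSV text with a header row into a JSON array of objects (one per row)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [{key: coerce(val) for key, val in row.items()} for row in reader]
    return json.dumps(rows, indent=2)


if __name__ == "__main__":
    # Hypothetical columns: one row per structural element of the piece.
    sample = "element,start,end,radius\nsection_a,0,42.5,1.2\nsection_b,42.5,97,0.8\n"
    print(csv_to_json(sample))
```

Keeping the conversion as a separate offline step means the browser only ever loads plain JSON, with no CSV parsing at runtime.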
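Diffusion-limited aggregation grows a cluster by letting randomly walking particles wander until they touch the cluster, at which point they stick. The sketch below is a hedged Python illustration of on-lattice DLA, not the Processing/p5.js code used for the textures. Seeding the whole top edge and releasing walkers from the bottom edge are assumptions chosen to produce downward-branching, root-like growth; the grid size, walker count, and `max_steps` cut-off are likewise illustrative.

```python
import random

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def dla(width: int, height: int, walkers: int, seed: int = 0, max_steps: int = 20000):
    """On-lattice diffusion-limited aggregation seeded along the top row.

    Returns a height x width grid of 0/1 cells, where 1 marks the cluster.
    """
    rng = random.Random(seed)
    grid = [[0] * width for _ in range(height)]
    for x in range(width):
        grid[0][x] = 1  # seed: the whole top edge acts as the "ground"

    def touches_cluster(x: int, y: int) -> bool:
        # 4-neighbourhood check; cells outside the grid count as empty
        for dx, dy in NEIGHBOURS:
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height and grid[ny][nx]:
                return True
        return False

    for _ in range(walkers):
        if any(grid[height - 1]):
            break  # the roots have reached the bottom edge; stop growing
        # Release a walker somewhere on the (still empty) bottom row
        x, y = rng.randrange(width), height - 1
        for _ in range(max_steps):
            if touches_cluster(x, y):
                grid[y][x] = 1  # the walker sticks and joins the cluster
                break
            dx, dy = rng.choice(NEIGHBOURS)
            # Clamp to the grid so walkers never leave the canvas
            x = min(max(x + dx, 0), width - 1)
            y = min(max(y + dy, 0), height - 1)
        # A walker that exceeds max_steps is simply discarded
    return grid


if __name__ == "__main__":
    for row in dla(40, 25, 300, seed=1):
        print("".join("#" if cell else "." for cell in row))
```

Because a walker only moves while none of its neighbours are occupied, it can never step onto the cluster, and every stuck cell is attached to an existing one. An image-generating version would draw the occupied cells into a texture instead of printing them.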

Our work was presented as a full paper at New Interfaces for Musical Expression (NIME) 2021; the paper can be found here alongside a short presentation video. The model can be found here, and the code repository here.

Web technology was chosen to realize the model because of its wide availability (anyone with an Internet connection can access the model, although the mobile version still needs some work) and because it made collaborating across distant locations (California and Denmark) easier. Because the analysis is based on pitch, rhythm, and structural processes rather than on traditional harmonic chord progressions or Western formal analysis, we hope it brings Grisey's music to a wider audience than traditional academic music circles.